What are Quantum Chips?

Introduction

Amazon Web Services (AWS) has unveiled its first quantum computing chip, called Ocelot, marking the company’s entry into quantum hardware. Ocelot is a prototype designed to tackle one of the biggest hurdles in quantum computing – the high error rates – by using an innovative approach to quantum error correction built directly into the chip. Announced in February 2025, Ocelot uses a novel “cat qubit” architecture (inspired by Schrödinger’s cat) that intrinsically suppresses certain errors, which could reduce the resources needed for error correction by up to 90% compared to conventional methods. To understand why this is significant, let’s break down the key concepts: what qubits are, why quantum error correction is crucial, how cat qubits work, what the Ocelot chip’s design entails, and what Amazon’s leap into quantum computing means for the industry.

Qubits vs. Classical Bits: What’s the Difference?

In classical computing, bits are the fundamental units of information and can exist only in one of two states: 0 or 1. Qubits (quantum bits), on the other hand, are the basic units of quantum information and can exist in a superposition of 0 and 1 at the same time. In simple terms, a qubit can be thought of like a spinning coin that is temporarily both heads and tails until you look at it. This property allows qubits to hold and process more information than classical bits because a collection of qubits can represent many combinations of 0/1 simultaneously. Qubits can also become entangled with one another, meaning the state of one qubit can depend on the state of another no matter how far apart they are – a counterintuitive quantum phenomenon with no classical equivalent. Thanks to superposition and entanglement, a quantum computer can, for certain problems, explore a vast number of possibilities in parallel, potentially solving some problems much faster (even exponentially faster) than any classical computer. This is why quantum computing is so promising for tasks like cryptography, complex optimization, and simulating molecular chemistry.

However, qubits are extremely sensitive and fragile. Unlike a sturdy classical bit (like a transistor on a chip) that reliably stays 0 or 1, a qubit’s delicate quantum state can be disturbed by the slightest interaction with its environment – a phenomenon known as quantum decoherence. Factors such as thermal vibrations, electromagnetic noise, or stray light can nudge a qubit out of its quantum state. As a result, qubits are unstable and error-prone: they require carefully controlled conditions (such as ultra-cold temperatures near absolute zero) to operate reliably. If you measure a qubit, its superposition “collapses” to a definite 0 or 1, and if any interference occurs before or during a computation, errors can creep in. In summary, while qubits offer extraordinary capabilities beyond classical bits, they also introduce new challenges because of how easily they can lose their quantum information. This fragility is the central problem that researchers must overcome to build practical quantum computers.
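To make the superposition and measurement ideas above concrete, here is a minimal Python sketch. It is an illustration only, not how real quantum hardware is programmed: the function names `measurement_probabilities` and `measure` are made up for this example, and the qubit is modeled as nothing more than a pair of complex amplitudes.

```python
import math
import random


def measurement_probabilities(alpha, beta):
    """Return (P(0), P(1)) for the state alpha|0> + beta|1> (Born rule)."""
    norm = abs(alpha) ** 2 + abs(beta) ** 2
    if norm == 0:
        raise ValueError("state vector must be non-zero")
    # Dividing by the norm lets callers pass unnormalized amplitudes.
    return abs(alpha) ** 2 / norm, abs(beta) ** 2 / norm


def measure(alpha, beta, rng):
    """Simulate one measurement: the superposition 'collapses' to 0 or 1."""
    p0, _ = measurement_probabilities(alpha, beta)
    return 0 if rng.random() < p0 else 1


# Equal superposition: the "spinning coin" from the text.
amp = 1 / math.sqrt(2)
p0, p1 = measurement_probabilities(amp, amp)

rng = random.Random(0)  # fixed seed so the run is repeatable
samples = [measure(amp, amp, rng) for _ in range(10_000)]
fraction_ones = sum(samples) / len(samples)

print(f"P(0) = {p0:.3f}, P(1) = {p1:.3f}")
print(f"fraction of 1s over {len(samples)} simulated measurements: {fraction_ones:.3f}")
```

Each simulated measurement returns a plain 0 or 1; the amplitudes only show up in the statistics over many repetitions, which is one reason a single disturbed qubit can silently corrupt a computation.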

The Need for Quantum Error Correction (QEC)

Because qubits are so error-prone, today’s quantum computers can only perform on the order of a thousand quantum operations (gates) before errors accumulate and corrupt the results. Yet, useful quantum applications (like breaking certain cryptographic codes or simulating complex molecules) would require billions of gate operations, far beyond what current hardware can handle without mistakes. This huge gap between what’s needed and what’s currently possible is often called the “quantum performance gap.” How can we bridge this gap? The answer is quantum error correction (QEC).

QEC is the quantum counterpart of the error-correcting techniques used in classical computing and communications (such as parity checks or redundancy for detecting/correcting bit flips). The core idea, proposed in the mid-1990s, is to encode a single “logical” qubit of information into multiple physical qubits in such a way that if some of those qubits suffer errors, the original information can still be recovered. In other words, the data is shared across multiple qubits so that the system can detect and fix errors on the fly, without disturbing the encoded information. Just as a classical digital system might add extra bits for redundancy (allowing it to detect if a bit was flipped from 0 to 1 and correct it), a quantum computer can add extra qubits and entangle them in special states that allow certain errors to be spotted and corrected by a series of measurements and corrective operations. Effective quantum error correction is the key to achieving reliable, large-scale quantum computing – it’s how we hope to go from today’s rudimentary quantum processors to tomorrow’s fault-tolerant quantum machines that can run complex algorithms for days or weeks.
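The classical redundancy idea mentioned above can be sketched in a few lines of Python. This is only the classical analogy: a quantum code cannot simply copy a qubit and read it out, so real QEC uses entanglement and indirect measurements instead. The 5% flip probability and all function names below are assumptions chosen for illustration.

```python
import random


def encode(bit, copies=3):
    """Classical repetition code: store the same bit several times."""
    return [bit] * copies


def apply_noise(codeword, flip_prob, rng):
    """Flip each stored bit independently with probability flip_prob."""
    return [b ^ 1 if rng.random() < flip_prob else b for b in codeword]


def decode(codeword):
    """Majority vote: return the value held by most of the copies."""
    return 1 if sum(codeword) > len(codeword) / 2 else 0


rng = random.Random(1)  # fixed seed so the run is repeatable
trials = 20_000
flip_prob = 0.05  # hypothetical physical error rate

# Unprotected bit: every flip is an error.
raw_errors = sum(rng.random() < flip_prob for _ in range(trials))

# Protected bit: an error requires at least two of three copies to flip.
coded_errors = sum(
    decode(apply_noise(encode(0), flip_prob, rng)) != 0 for _ in range(trials)
)

print(f"unprotected error rate: {raw_errors / trials:.4f}")
print(f"3-copy majority-vote error rate: {coded_errors / trials:.4f}")
```

The protected bit fails far less often than the raw one, at the price of three times the storage. Quantum codes make the same trade, only with a much larger multiplier.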

The challenge, however, is that quantum error correction comes at a steep cost in hardware. To correct errors, a quantum computer must use many physical qubits to represent a single high-quality logical qubit. In fact, traditional QEC schemes like the surface code (used in many superconducting quantum chips) might require thousands of physical qubits per logical qubit to get error rates low enough for useful computations. This means a full-scale, commercially relevant quantum computer could need millions of physical qubits, given that practical applications may demand on the order of 1000 logical qubits or more. Today’s quantum devices only have tens or at most a few hundred qubits, so the scale-up required is enormous.

One fundamental reason for this high overhead is that quantum bits can fail in more ways than classical bits. A classical bit has one type of error to worry about – a bit-flip (0 turning into 1 or vice versa). A qubit, by contrast, can suffer both a bit-flip error and a phase-flip error. A phase-flip is a uniquely quantum error in which the relative phase between the |0⟩ and |1⟩ parts of a superposition is altered (for example, |0⟩ + |1⟩ becomes |0⟩ − |1⟩); it has no analogue in classical bits. Because qubits have this extra failure mode, quantum error correction schemes must introduce extra redundancy to handle both bit-flips and phase-flips. This makes QEC markedly more resource-intensive than classical error correction. For example, a good classical error-correcting code might only add, say, 20-30% more bits to achieve a very low error rate, whereas a quantum code like the surface code can demand 10,000% overhead or more (i.e. 100× or more qubits) to reach comparable error rates.

This huge qubit overhead is a major roadblock for quantum computing. Every additional physical qubit also needs control electronics, cooling, and introduces more possible points of failure. Thus, researchers are intensely focused on finding more hardware-efficient QEC approaches – ways to reduce the number of physical qubits needed per logical qubit. If we can lower that overhead, building a large-scale quantum computer becomes much more feasible. This is exactly where Amazon’s Ocelot chip makes a contribution: it demonstrates a new architectural approach that significantly cuts down the qubit overhead required for error correction. Before we get into Ocelot’s design, we need to understand the special kind of qubit it uses: the “cat qubit.”

What Are “Cat Qubits” and How Do They Help?

Cat qubits are a novel type of qubit named after the famous Schrödinger’s cat thought experiment (where a cat in a box is in a quantum limbo of being simultaneously alive and dead). In simple terms, a cat qubit is implemented not by a single two-state system, but by a quantum oscillator (such as a mode of electromagnetic field in a microwave resonator) that can occupy multiple energy states. While a normal qubit has two basis states (|0⟩ and |1⟩), a bosonic qubit like a cat qubit uses two special states of a quantum oscillator to represent |0⟩ and |1⟩. For instance, these could be two distinguishable combinations of many photons in a microwave cavity. The name “cat” comes from the fact that the states used might be superpositions of two classical-like states (analogous to the “alive” and “dead” states of Schrödinger’s hypothetical cat). In practice, one can create a “cat state” in an oscillator which is a quantum superposition of two opposite-phase oscillation states. These behave a bit like the cat being in two states at once.

1. What is Schrödinger’s Cat?

Schrödinger’s cat is a famous quantum mechanics thought experiment that illustrates the concept of superposition. In this hypothetical scenario:

- A cat is placed inside a closed box with a mechanism that has a 50% chance of releasing poison (which would kill the cat) and a 50% chance of doing nothing.

- Until we open the box to check, quantum mechanics suggests that the cat exists in a superposition of both “alive” and “dead” states at the same time.

- The cat’s true state (either alive or dead) is only determined when we observe (measure) it.

This paradox highlights a fundamental property of quantum systems: they can exist in multiple states at once until they are measured.

2. How Are Cat Qubits Different From Regular Qubits?

A normal qubit (like those in superconducting quantum computers) has two basis states and can also be in a superposition of them:

- |0⟩ (similar to a classical 0)

- |1⟩ (similar to a classical 1)

- Or a superposition of both (α|0⟩ + β|1⟩), meaning a measurement returns 0 with probability |α|² and 1 with probability |β|².

A cat qubit, however, is implemented in a different way. Instead of using a single two-state system, it is created using a quantum oscillator—a system that can have many different energy levels.

For example:

- In superconducting circuits, this oscillator can be a microwave resonator, which is a device that traps and sustains electromagnetic waves.

- This oscillator can hold many photons (particles of light), and its quantum state can be used to represent a qubit.

3. How Do Cat Qubits Work?

Instead of storing |0⟩ and |1⟩ in a single atomic or superconducting circuit state, cat qubits encode quantum information using two distinct states of an electromagnetic field:

- These states are often called “coherent states” (special states of light inside the resonator).

- The two chosen states have opposite phases (they oscillate in opposite directions).

To draw an analogy:

- Think of a pendulum that can swing in two opposite directions.

- One direction represents |0⟩, and the other represents |1⟩.

- But, just like Schrödinger’s cat, the system can also exist in a quantum superposition of both directions simultaneously.

The key takeaway is that the two chosen states in the oscillator act as the two states of the qubit, but they are more stable against errors than traditional qubits.
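For readers who want the notation, the two opposite-phase coherent states can be written as follows. This uses one common textbook convention, which is an assumption here since the article does not fix a notation: α is the complex amplitude of the oscillation, and |α|² is the average number of photons in the resonator.

```latex
% Approximate encoding of the qubit states in opposite-phase coherent states
|0\rangle_{\mathrm{cat}} \approx |\alpha\rangle ,
\qquad
|1\rangle_{\mathrm{cat}} \approx |{-\alpha}\rangle

% "Cat states": normalized superpositions of the two coherent states
|\mathcal{C}^{\pm}_{\alpha}\rangle
  = \frac{|\alpha\rangle \pm |{-\alpha}\rangle}
         {\sqrt{2\left(1 \pm e^{-2|\alpha|^{2}}\right)}}
```

The exponential in the normalization comes from the overlap of the two coherent states, ⟨α|−α⟩ = e^{−2|α|²}, which shrinks rapidly as the average photon number grows.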

4. Why Are They Called “Cat Qubits”?

- The term “cat qubit” comes from the fact that these states are superpositions of two distinct, classical-like states (just like Schrödinger’s cat being “alive” and “dead” at the same time).

- These states are sometimes called “Schrödinger cat states” because they behave like a macroscopic quantum superposition.

5. Why Are Cat Qubits Useful?

One of the biggest challenges in quantum computing is quantum error correction. Traditional qubits are highly prone to errors because:

- They can undergo bit-flip errors (flipping between |0⟩ and |1⟩).

- They can undergo phase-flip errors (the relative sign between the |0⟩ and |1⟩ parts of a superposition flips).

Cat qubits help solve this problem:

- They are designed so that bit-flip errors happen exponentially less often (because the two coherent states are well separated in phase space).

- This means they are more stable and need less frequent error correction.

This is why AWS is using cat qubits in its new Ocelot quantum chip—because they make quantum error correction much more efficient.

The big advantage of cat qubits is that they exhibit an intrinsic protection against certain errors. In particular, cat qubits can be engineered to strongly resist bit-flip errors. Because the two states of the oscillator (defining 0 and 1) are usually well separated in phase space, a random perturbation is highly unlikely to accidentally turn one into the other – it would require a large, coherent change (like adding or removing many photons at once). In technical terms, increasing the number of photons in the cat qubit’s oscillator makes the bit-flip error rate exponentially small. It’s as if making the cat “bigger and louder” makes it much harder for it to inadvertently flip between being alive and dead. This means that instead of fighting bit-flip errors by redundantly encoding multiple qubits, you can simply pump more energy (photons) into the oscillator to stabilize it. Phase-flip errors, on the other hand, can still occur more readily (they correspond to subtle shifts in the oscillator’s phase), so the cat qubit primarily turns the qubit’s error profile into one where phase flips are the dominant type of error and bit flips are extremely rare. This imbalance is often called a biased noise or biased error qubit.
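A rough feel for "well separated in phase space" comes from the overlap between the two coherent states, which has the standard closed form |⟨α|−α⟩|² = exp(−4|α|²), where |α|² is the average photon number. The Python sketch below evaluates that formula and cross-checks it against a direct sum over photon-number states. The overlap is only a proxy for how distinguishable the two states are. It is not the bit-flip rate of any real device, which depends on hardware details the article does not give, and the function names are made up for this example.

```python
import math


def coherent_overlap_squared(mean_photons):
    """Closed form |<alpha|-alpha>|^2 = exp(-4 |alpha|^2)."""
    if mean_photons < 0:
        raise ValueError("mean photon number cannot be negative")
    return math.exp(-4.0 * mean_photons)


def overlap_from_fock_sum(mean_photons, cutoff=80):
    """Cross-check: <alpha|-alpha> = exp(-|alpha|^2) * sum_n (-|alpha|^2)^n / n!"""
    total = 0.0
    term = 1.0  # the n = 0 term of the series
    for n in range(cutoff):
        total += term
        term *= -mean_photons / (n + 1)  # next term of the series
    return math.exp(-mean_photons) * total


for mean_photons in (1, 2, 4):
    closed = coherent_overlap_squared(mean_photons)
    summed = overlap_from_fock_sum(mean_photons) ** 2
    print(f"mean photons = {mean_photons}: overlap^2 = {closed:.3e} (series: {summed:.3e})")
```

Each added photon multiplies the overlap by the same small factor, which is the exponential behavior the paragraph above describes.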

Why is this helpful? Because if your physical qubits hardly ever flip bits, you only need to actively correct the phase-flip errors. You’ve effectively reduced the problem to something closer to classical error correction (dealing with one type of error) which can be done with far fewer resources. In summary, cat qubits “spend” photons instead of qubits to achieve error suppression. This makes quantum error correction far more efficient: you don’t need thousands of physical qubits to protect one logical qubit if each cat qubit is already very robust by itself. Researchers have been exploring bosonic qubits like cat qubits for the past decade in laboratories, demonstrating that they can greatly prolong the lifetime of quantum information (by suppressing bit-flips) in single-qubit experiments. The open question was whether this approach could be scaled up into a multi-qubit system and integrated into a practical computing architecture. This is exactly what AWS’s Ocelot chip set out to prove.

AWS built Ocelot as a test of a scalable bosonic error-corrected architecture, using cat qubits as the building blocks. By using cat qubits, Ocelot aims to dramatically reduce the qubit overhead required for error correction, since each qubit is inherently more reliable. In fact, Amazon notes that cat qubits “intrinsically suppress certain forms of errors, reducing the resources required for quantum error correction”. The Ocelot chip is the first prototype to combine multiple cat qubits and the necessary error-correction circuitry on a single microchip.

Inside the Ocelot Chip: Architecture and Breakthroughs

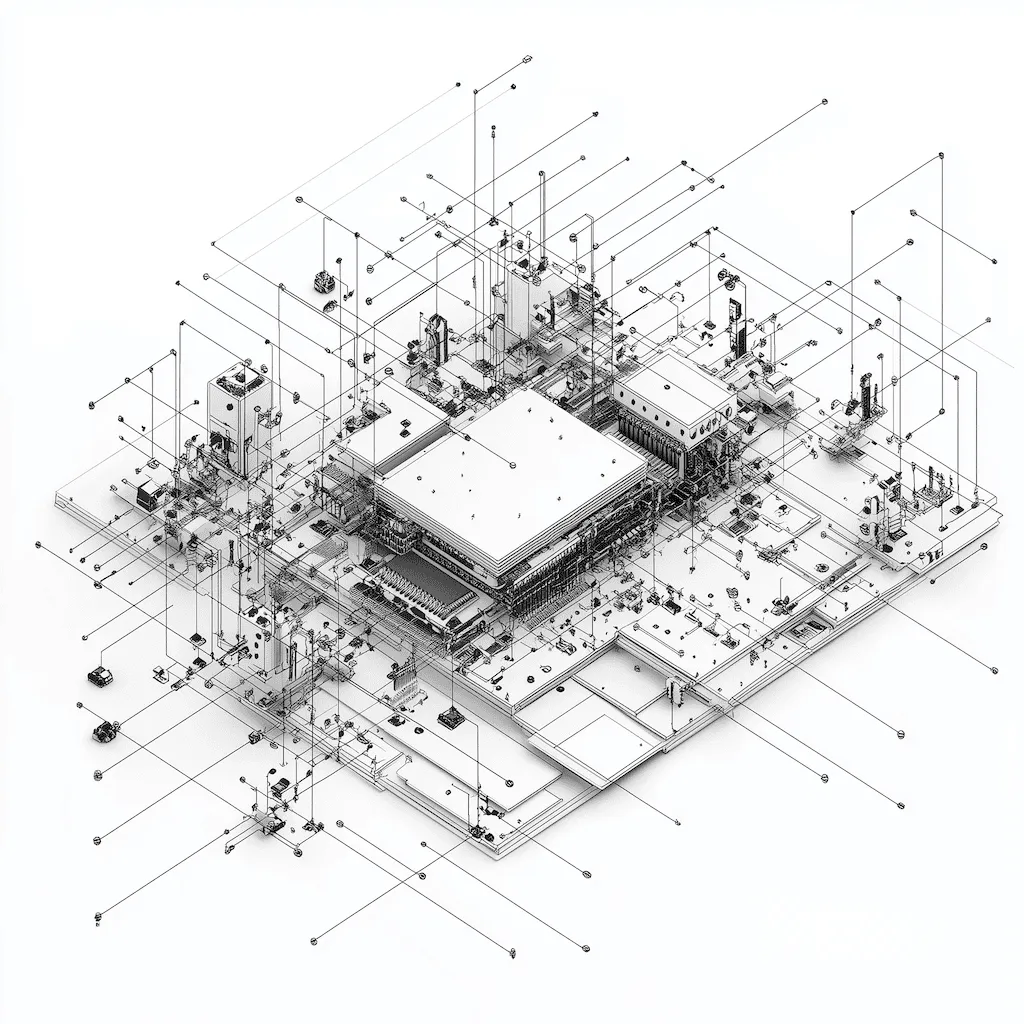

The Ocelot quantum chip prototype, composed of a pair of silicon microchips that together serve as one logical-qubit memory. This design integrates quantum error correction at the hardware level using the cat-qubit architecture to suppress errors. Amazon’s team estimates that such an approach could use only around one-tenth the number of physical qubits that conventional quantum error correction would require for the same level of performance.

The Ocelot device is essentially a logical qubit implemented with multiple physical qubits – it’s like a very small quantum computer dedicated to storing a single protected qubit of information. Physically, Ocelot consists of two bonded silicon chips (stacked one on top of the other) forming a single module. On these chips reside the quantum circuits: specifically, five cat qubits serve as the data qubits that hold the quantum information, and they are accompanied by a set of ancillary qubits and circuits for error detection. The architecture can be viewed as a quantum memory where the information is redundantly encoded in the five cat qubits (this is sometimes called a repetition code of length 5). Each cat qubit is realized by a superconducting microwave resonator (oscillator) with a “nonlinear buffer” circuit that helps stabilize the cat state (this keeps the qubit in those two desired oscillator states and suppresses bit-flips). Additionally, each cat qubit is connected to a couple of conventional superconducting qubits known as transmons (transmon qubits are the standard qubit type used in many quantum labs, like those at Google and IBM). These transmons act as ancilla (helper) qubits that interact with the cat qubits to detect any errors, particularly phase-flip errors.

At a high level, Ocelot’s quantum error correction scheme works as follows:

- Bit-flip errors are prevented at the source: The cat qubits are designed so that bit-flips (analogous to a qubit flipping from |0⟩ to |1⟩) occur extremely rarely – effectively exponentially less often than in a normal qubit. This is achieved by the nature of the cat state (with an average of ~4 photons in Ocelot’s case), which keeps the two logical states of the qubit well separated. In practice, the Ocelot team reported bit-flip times on the order of one second, which is over a thousand times longer than the lifetime of a typical superconducting qubit. One second may not sound long, but in the quantum world it’s an eternity – millions of operations can be done in that time.

- Phase-flip errors are corrected actively: Since phase errors can still happen on the cat qubits (on the scale of tens of microseconds for Ocelot, which is much shorter than bit-flip times), Ocelot uses a simple repetition error-correcting code across the five cat qubits to catch and fix these errors. Essentially, the logical qubit’s state is encoded redundantly such that if one of the five qubits experiences a phase flip, it can be detected by comparing it to the others. The transmon ancilla qubits measure parity checks between the cat qubits, which flags any qubit that has deviated (flipped phase) without collapsing the overall quantum information. This repetition code is the quantum analog of a very simple classical error-correcting code that votes out errors by redundancy.

- “Noise-biased” gates preserve the protection: A challenge in any error-correcting code is that the operations used to check for errors can themselves introduce new errors. Ocelot addresses this by using specially engineered controlled-NOT (CNOT) gates between the cat qubits and the transmon ancillas that are bias-preserving. In other words, these gates are designed not to disturb the delicate bias (i.e., they don’t inadvertently cause bit-flips on the cat qubits while detecting phase-flips). AWS reports that this is the first implementation of noise-biased gates in a quantum chip, and they were key to making the error detection possible without losing the inherent protection of the cat qubits.
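The "compare neighbors and fix the odd one out" logic of a repetition code can be sketched classically in Python. This is a toy model under stated assumptions, not AWS’s decoder: phase flips are tracked as plain 0/1 flags, the parity checks are assumed to be measured perfectly, and the function names `syndrome`, `decode`, and `is_corrected` are invented for this example.

```python
def syndrome(errors):
    """Parity of each neighboring pair: 1 means the two qubits disagree.

    For 5 data qubits this yields 4 check results, one per ancilla.
    """
    return [errors[i] ^ errors[i + 1] for i in range(len(errors) - 1)]


def decode(checks):
    """Return the lowest-weight error pattern consistent with the checks."""
    # Assume the first qubit is error-free and propagate the parities.
    candidate = [0]
    for check in checks:
        candidate.append(candidate[-1] ^ check)
    # The only other pattern with the same checks is the full complement.
    complement = [bit ^ 1 for bit in candidate]
    # For an odd number of qubits the two weights can never tie.
    return candidate if sum(candidate) < sum(complement) else complement


def is_corrected(errors):
    """True if the decoder identifies exactly the flips that occurred."""
    return decode(syndrome(errors)) == list(errors)


print(is_corrected([0, 0, 1, 0, 0]))  # one phase flip
print(is_corrected([1, 1, 0, 0, 0]))  # two phase flips
print(is_corrected([1, 1, 1, 0, 0]))  # three phase flips
```

In this model a five-qubit code recovers from any one or two flips but guesses wrong when three qubits flip at once. Note that the decoder never looks at the stored value itself, only at whether neighbors agree, which mirrors how the ancillas avoid collapsing the encoded information.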

Putting it together, the five cat qubits plus their ancillas operate as one robust logical qubit memory. In an experiment reported in Nature by Amazon’s team, they ran sequences of error-correction cycles on Ocelot to test how well this logical qubit is preserved. They compared a distance-3 code (using 3 cat qubits in the repetition code) versus a distance-5 code (all 5 cat qubits) to see the benefit of scaling up. As expected, the logical error rate dropped significantly when going from 3 to 5 cat qubits in the code. In particular, the probability of a phase-flip error happening on the encoded logical qubit was much lower with the larger code, demonstrating that the error correction was indeed working – adding more qubits (to a point) made the logical qubit more reliable.
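Why a longer code helps can be seen in an idealized calculation. The Python sketch below assumes every qubit flips independently with the same probability per cycle and that decoding is a perfect majority vote. Both are simplifying assumptions, and the 5% flip probability is a made-up input, so the output illustrates the trend only and is not Ocelot’s measured data.

```python
from math import comb


def logical_error_rate(distance, p):
    """Probability that more than half of `distance` qubits flip.

    Assumes independent flips with probability p and majority-vote decoding.
    """
    if distance < 1 or distance % 2 == 0:
        raise ValueError("distance must be a positive odd integer")
    if not 0.0 <= p <= 1.0:
        raise ValueError("p must be a probability")
    first_failing = distance // 2 + 1  # smallest number of flips that wins the vote
    return sum(
        comb(distance, k) * p**k * (1 - p) ** (distance - k)
        for k in range(first_failing, distance + 1)
    )


physical_p = 0.05  # hypothetical per-qubit phase-flip probability
for distance in (3, 5):
    rate = logical_error_rate(distance, physical_p)
    print(f"distance {distance}: logical error rate = {rate:.6f}")
```

In this toy model the distance-5 code fails several times less often than the distance-3 code, because a failure now needs three simultaneous flips instead of two.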

Perhaps most impressively, even when accounting for potential bit-flip errors, the larger 5-qubit code achieved almost the same overall error rate as the 3-qubit code (actually slightly better). Normally, adding more qubits could introduce more avenues for error (since each qubit could flip), but thanks to the cat qubits’ bias and the protective gating, Ocelot did not suffer a net penalty for using more qubits. The AWS researchers observed about a 1.65% error per error-correction cycle with the 5-qubit code, versus 1.72% with the 3-qubit code – essentially the same, meaning the extra qubits were pure gain in catching phase errors without introducing additional bit-flip errors. This is a strong validation of the hardware-efficient approach: the error-correcting benefits scaled as expected.

A key outcome of Ocelot is the dramatic reduction in qubit overhead it demonstrates. The entire Ocelot logical qubit (distance-5 repetition code) uses 5 data qubits + 4 ancilla qubits = 9 qubits in total. By contrast, the leading conventional scheme (the surface code) would require on the order of 49 physical qubits to encode a single logical qubit with comparable error protection (in surface code, a code distance of 5 typically involves a 5×5 grid of 25 data qubits plus additional ancillas for readout). In other words, Ocelot achieved a certain level of error correction with less than one-fifth the number of qubits that would normally be needed. This is the “hardware efficiency” claim in action. It implies that if we scale up this approach, we might build a quantum computer with, say, a few hundred thousand physical cat qubits to get thousands of logical qubits, instead of needing millions of physical qubits. That’s a huge difference in engineering complexity and cost. Indeed, Amazon’s researchers estimate that scaling up Ocelot’s architecture could reduce the overhead for error correction by up to 90% compared to the conventional approaches at similar error rates.
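The qubit counts quoted above follow from two simple formulas, sketched here in Python. The repetition-code count (d data qubits plus d − 1 ancillas) matches the 5 + 4 layout described for Ocelot. The surface-code count assumes the common "rotated" layout with d² data qubits and d² − 1 ancillas, which reproduces the 49 in the text. The function names are made up for this example, and the counts ignore supporting circuitry such as the buffer circuits attached to each cat qubit.

```python
def repetition_code_qubits(distance):
    """Data qubits in a line, with one ancilla between each neighboring pair."""
    return distance + (distance - 1)


def surface_code_qubits(distance):
    """Rotated surface code: d*d data qubits plus d*d - 1 ancillas (assumed layout)."""
    return distance * distance + (distance * distance - 1)


for distance in (3, 5, 7):
    rep = repetition_code_qubits(distance)
    surf = surface_code_qubits(distance)
    print(f"distance {distance}: repetition = {rep}, surface = {surf}, ratio = {rep / surf:.2f}")
```

At distance 5 this gives 9 versus 49 qubits, a ratio of roughly 0.18, and the gap widens with distance because one count grows linearly and the other quadratically.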

It’s important to note that Ocelot is a first-generation prototype – essentially a proof of concept. It demonstrates that bosonic cat qubits can be integrated and made to work together for error correction on a chip. The results are encouraging: Ocelot achieved state-of-the-art performance for superconducting quantum hardware in terms of qubit stability (bit-flip times of about 1 s and phase-flip times of about 20 µs), and it showed the feasibility of a bias-preserving error-corrected qubit. Moving forward, the plan is to improve on this design. Future versions of Ocelot are already in development, aiming to drive down the logical error rates exponentially by both refining the components (longer-lived qubits, better gates) and increasing the code distance (using more cat qubits in the repetition code). As those refinements come, the logical error rate per error-correction cycle should fall lower and lower, eventually becoming small enough for long computations to finish reliably, which is what fault-tolerant computing requires. The Ocelot architecture will serve as a fundamental building block in AWS’s quest to build a full-fledged fault-tolerant quantum computer, meaning one that can run long computations while keeping accumulated errors negligible.

Amazon’s Entry into Quantum Computing: Broader Implications

Amazon’s unveiling of the Ocelot chip is a significant moment in the quantum computing field. It signifies that yet another tech giant – alongside the likes of Google, IBM, and Microsoft – is not only investing in quantum computing research but is now developing its own custom quantum hardware. In fact, the announcement of Ocelot came just about a week after Microsoft revealed its new “Majorana” quantum chip based on a topological qubit approach, and a couple of months after Google announced its advanced Willow processor that achieved a landmark computational feat. The quantum computing race is heating up, with recent breakthroughs coming from multiple fronts. Amazon’s entry through AWS can be seen as part of this wave of rapid progress that has some experts calling this period a potential “quantum tipping point”. As AWS’s Oskar Painter (lead quantum hardware engineer and one of Ocelot’s creators) put it, “What makes Ocelot different and special is the way it approaches the fundamental challenge we have with quantum computers, and that is the errors that they’re susceptible to.” By focusing squarely on error correction and hardware efficiency, Amazon is attacking the hardest barrier to useful quantum computing from the start.

The broader implication of Amazon’s approach is that it might accelerate the timeline for achieving practical, scalable quantum computers. By potentially cutting down the qubit requirements (and thus the complexity and cost) by an order of magnitude, Amazon’s strategy could make it easier to scale quantum hardware to sizes that perform meaningful tasks. This is a different philosophy than simply trying to build a slightly bigger quantum chip each year. It’s more about finding the right architecture now – akin to how the invention of the transistor (a more scalable hardware element) was what enabled classical computers to eventually incorporate billions of components. If cat qubits and similar approaches prove out, they might become the preferred building blocks for large quantum machines, much like transistors replaced vacuum tubes in the classical era. Amazon’s Ocelot is one of the first demonstrations of an alternative quantum error correction architecture that could compete with (or complement) the traditional schemes being pursued by others.

It’s also noteworthy that Amazon’s quantum hardware effort is centered at the AWS Center for Quantum Computing, which is a partnership with Caltech – indicating a strong academia-industry collaboration. The results were published in a peer-reviewed journal (Nature), lending scientific credibility to the claims. Experts in the field have reacted positively to the news. For example, Rob Schoelkopf, a prominent quantum physicist and cofounder of a quantum startup, noted that Amazon’s results “highlight how more efficient error correction is key to ensuring viable quantum computing” and called it “a good step toward exploring and preparing for future roadmaps” in quantum technology. In other words, Amazon is pushing the community to think about error correction efficiency, not just qubit count. There’s a healthy diversity of approaches now: while Amazon and Google are working with superconducting circuits (Google largely using traditional transmon qubits with surface codes, and Amazon using cat qubits with bosonic codes), Microsoft is betting on exotic topological qubits (Majorana particles) that, if they work as hoped, might suppress errors by physics alone. Each approach has the same goal – reduce error rates and overhead – but via different means. It’s too early to say which approach will win out, or if they’ll coexist for different applications, but having multiple shots on goal increases the chance that one will succeed in making quantum computing practical.

From an industry perspective, Amazon’s move also underscores the importance of cloud providers in the future of quantum computing. AWS already operates Amazon Braket, a cloud service that lets customers experiment with quantum algorithms on various quantum hardware back-ends (supplied by other companies). With Ocelot and its successors, Amazon is clearly gearing up to eventually provide its own quantum processing units on the cloud. This could integrate with AWS’s massive cloud infrastructure, potentially allowing hybrid classical-quantum computing workflows at scale. In the bigger picture, Amazon’s entry means more competition and investment in the quantum race, which can spur faster innovation. Each milestone – whether from Amazon, Google, IBM, Microsoft, or others – is pushing the technology forward and showing that progress, while difficult, is being made.

It’s important to temper the excitement with realism: Ocelot is a laboratory prototype, not a commercial device. As Troy Nelson, a tech officer in quantum-safe cybersecurity, remarked about these developments, “There’s lots of challenges ahead. What Amazon gained in error correction … was a trade-off for the complexity and sophistication of the control systems and readouts from the chip. We’re still in prototype days, and we still have multiple years to go, but they’ve made a great leap forward.” In other words, Amazon solved one piece of the puzzle (error correction efficiency) but in doing so, introduced new engineering complexity that will need to be refined. Building a full-scale quantum computer will require scaling this up by orders of magnitude, improving the reliability even further, and integrating with classical control systems – all of which are non-trivial tasks. Some skeptics, like the CEO of Nvidia, have suggested truly useful quantum computers could still be 20 years away in a worst-case scenario.

Nonetheless, the consensus in the community is that we have entered a new era where fundamental obstacles to quantum computing (like error correction) are starting to be addressed in real devices. Amazon’s Ocelot chip contributes to that momentum. It demonstrates a promising route to making quantum computers more resource-efficient and scalable, bringing us a step closer to the long-term goal of a fault-tolerant quantum computer. With heavyweights like Amazon now fully in the game – alongside other tech giants and startups – the race to achieve quantum advantage for practical problems is more energized than ever. Each breakthrough, like the Ocelot chip, builds confidence that the immense challenges of quantum computing can be met, and it brings the day closer when quantum computers will tackle problems that even the best classical supercomputers cannot solve.

In summary, Amazon’s Ocelot chip is more than just a new piece of hardware – it’s a proof-of-concept that we can dramatically improve quantum error correction through clever engineering. It showcases the viability of cat qubits as a platform for scalable quantum computing, potentially rewriting the roadmap for how we build quantum machines. And it signals that Amazon is poised to be a major player in the quantum computing revolution, which ultimately benefits the entire field by driving innovation and competition. The journey to practical quantum computing is far from over, but with developments like these, the future is looking ever more quantum.

Sources:

- Nature (2025). Hardware-efficient quantum error correction via concatenated bosonic qubits.

- Brandão, F. & Painter, O. (2025). Amazon announces Ocelot quantum chip. Amazon Science Blog.

- Amazon Staff (2025). AWS announces a new quantum computing chip. AboutAmazon News (Amazon’s new Ocelot chip brings us closer to building a practical quantum computer) (Press release summarizing Ocelot’s features and 90% error-correction overhead reduction).

- Business Insider (2025). Amazon joins the quantum computing race, announcing new ‘Ocelot’ chip. (Amazon Debuts Ocelot Chip, Joining Quantum Race With Google, Microsoft - Business Insider) (Coverage of Ocelot with expert commentary on its significance and comparison to other companies’ efforts).

- Quantum computing: When the right direction is random | ASU News

- Neutral Atoms Quantum Computing for Physics-Informed Machine Learning - Pasqal

- Physicists found the shortest measurement to collapse a quantum state | New Scientist