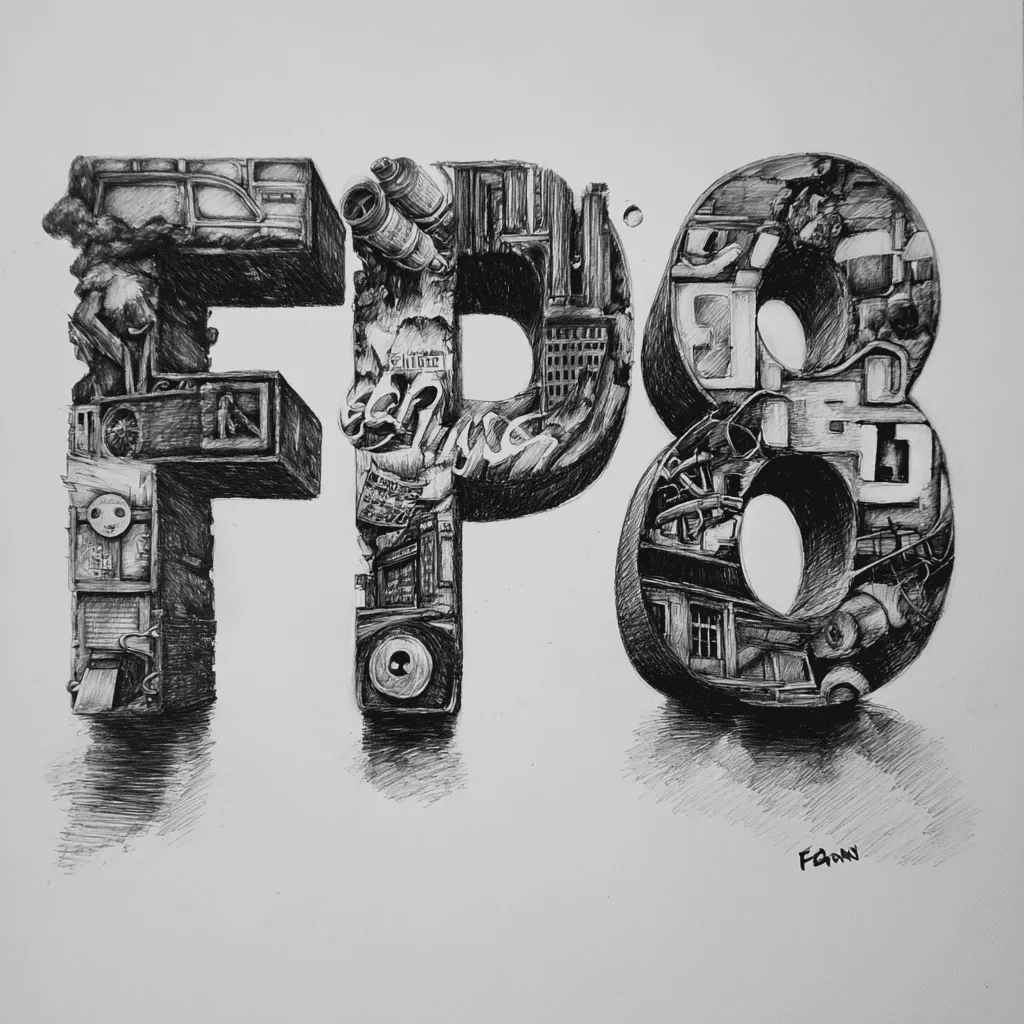

UE8M0 FP8 Number Format

Training a 671-billion-parameter AI model requires massive computational resources: typically hundreds to thousands of high-end data-center GPUs and millions of dollars. But what if you could get similar results with roughly half the memory and up to double the throughput by using a clever 8-bit number format?

DeepSeek’s use of UE8M0 FP8 scaling is a notable step toward efficient AI training. DeepSeek says DeepSeek-V3.1 was trained with the UE8M0 FP8 scale data format and that the format is designed for upcoming domestic Chinese chips, which would let massive language models run on alternative hardware while maintaining competitive performance. The specialized 8-bit format trades precision for efficiency, which could let models like DeepSeek-V3.1 be trained with less reliance on expensive Nvidia hardware.

But UE8M0 isn’t just about cost savings. It reflects a rethink of how numbers are represented in AI computation, prioritizing range over precision in ways that challenge conventional wisdom about floating-point arithmetic.

The FP8 Landscape

First, it’s helpful to understand where UE8M0 fits in the growing family of 8-bit floating-point formats:

| Format | Sign | Exponent | Mantissa | Key Characteristic | Primary Use |
|---|---|---|---|---|---|
| E4M3 | 1 bit | 4 bits | 3 bits | Balanced precision/range | General AI training |
| E5M2 | 1 bit | 5 bits | 2 bits | Extended range | Large dynamic ranges |
| UE8M0 | 0 bits | 8 bits | 0 bits | Maximum range, powers of 2 only | Scaling factors |

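UE8M0 stands out as the most extreme design choice: it eliminates both the sign and mantissa bits so that all eight bits go to the exponent. This makes it suited to serving as a scaling factor rather than as a general-purpose number format; it is the same exponent-only scale type (E8M0) used by the Open Compute Project’s Microscaling (MX) formats.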

Breaking Down UE8M0 FP8

The name UE8M0 describes the structure of the format:

- U (Unsigned): The format does not include a sign bit. Dropping it rules out negative numbers but frees that bit for the exponent, extending the range of positive values.

- E8 (Exponent 8): Eight bits are allocated to the exponent, giving the format a wide dynamic range to represent values of vastly different magnitudes.

- M0 (Mantissa 0): No bits are used for the mantissa (or significand). This extreme choice maximizes range at the expense of precision.
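Because there is no sign and no mantissa, decoding a UE8M0 byte is just a shift of the exponent. A minimal Python sketch, assuming the bias of 127 used in the conversion steps below and the convention that 0xFF encodes NaN (so there is no zero and no infinity); `ue8m0_to_float` is a hypothetical helper name, not a library API:

```python
import math

BIAS = 127  # UE8M0 exponent bias


def ue8m0_to_float(code: int) -> float:
    """Decode one UE8M0 byte: value = 2**(code - 127), with 0xFF reserved for NaN."""
    if not 0 <= code <= 0xFF:
        raise ValueError("a UE8M0 code is an unsigned 8-bit integer")
    if code == 0xFF:
        return math.nan  # the only special value: no zero, no infinity
    # No sign bit and no mantissa bits: the implied significand is always 1.
    return math.ldexp(1.0, code - BIAS)


print(ue8m0_to_float(127))  # 1.0
print(ue8m0_to_float(121))  # 0.015625, i.e. 2**-6
print(ue8m0_to_float(255))  # nan
```

Codes 0 through 254 therefore cover 2^-127 to 2^127.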

The Scaling Factor Strategy

UE8M0’s unique design makes it ideal for a specific role in AI training: scaling factors. Here’s how this works:

Mixed-Precision Training

Instead of doing all computation in FP8, UE8M0 enables a clever mixed-precision approach:

- Core computation happens in FP8 (fast, memory-efficient)

- Scaling factors use UE8M0 to maintain numerical stability

- Critical operations fall back to higher precision when needed

This hybrid approach aims to deliver the speed benefits of 8-bit arithmetic while avoiding the numerical instability that often plagues low-precision training.
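To make the scaling-factor idea concrete, here is a sketch of picking one UE8M0 scale for a block of values so that the scaled block fits FP8 E4M3’s range (largest finite magnitude 448). The round-up policy, the example block, and the helper names `block_scale_ue8m0` and `apply_scale` are assumptions for illustration, not DeepSeek’s actual implementation:

```python
import math

E4M3_MAX = 448.0  # largest finite magnitude of an FP8 E4M3 element
BIAS = 127        # UE8M0 exponent bias


def block_scale_ue8m0(block: list[float]) -> int:
    """Choose a power-of-two scale for one block; return it as a UE8M0 code.

    Assumption: the exponent is rounded *up*, so max|x| / scale never exceeds
    E4M3_MAX and no element has to saturate. Real implementations may differ.
    """
    amax = max(abs(v) for v in block)
    if amax == 0.0:
        return BIAS  # all-zero block: any scale works, so use 2**0
    exp = math.ceil(math.log2(amax / E4M3_MAX))
    exp = max(-127, min(127, exp))  # stay inside finite UE8M0 codes 0..254
    return exp + BIAS


def apply_scale(block: list[float], code: int) -> list[float]:
    """Divide every element by the block's scale 2**(code - 127)."""
    return [math.ldexp(v, BIAS - code) for v in block]


block = [0.01, -0.5, 3.14, 1.25, 1000.0, -9000.0]
code = block_scale_ue8m0(block)
print(code, apply_scale(block, code))
```

For this block the sketch picks code 132 (scale 2^5 = 32), which maps -9000.0 to -281.25, safely inside E4M3’s range. Rounding the exponent to nearest instead would choose 2^4 and leave 9000 / 16 = 562.5, which exceeds 448 and would have to saturate.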

Hardware Optimization

DeepSeek has said its UE8M0 FP8 scale format is designed for upcoming Chinese-made accelerators. Building around such hardware offers several potential advantages:

- Native support eliminates conversion overhead

- Optimized pipelines designed around the format’s constraints

- Reduced dependency on Nvidia’s hardware ecosystem

- Cost efficiency for large-scale domestic AI development

Understanding UE8M0 Through Examples

The key insight about UE8M0 is simple: it can only represent powers of 2. With no mantissa bits, every value must be exactly 2^n for some integer n between -127 and 127.

The Conversion Process

Converting to UE8M0 follows these steps:

- Handle negatives: Take absolute value (UE8M0 is unsigned)

- Find nearest power of 2: Round the number to the closest 2^n

- Calculate storage value: Add a bias of 127 to the exponent (finite values use codes 0 to 254; code 255 is reserved for NaN)
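The three steps translate directly into code. This Python sketch assumes “closest” means the smallest linear distance (ties round up), saturates out-of-range exponents to codes 0 and 254, maps zero to the smallest code, and maps non-finite inputs to 0xFF; those edge-case choices and the name `float_to_ue8m0` are assumptions, not part of any specification:

```python
import math

BIAS = 127  # UE8M0 exponent bias


def float_to_ue8m0(x: float) -> int:
    """Encode a float as a UE8M0 byte following the three steps above."""
    if math.isnan(x) or math.isinf(x):
        return 0xFF  # assumption: non-finite inputs map to the NaN code
    x = abs(x)                      # step 1: drop the sign
    if x == 0.0:
        return 0                    # assumption: no zero in UE8M0, use 2**-127
    m, e = math.frexp(x)            # x = m * 2**e with 0.5 <= m < 1
    # Step 2: the candidates are 2**(e-1) and 2**e; their linear midpoint
    # 0.75 * 2**e corresponds to m == 0.75 (ties round up).
    exp = e if m >= 0.75 else e - 1
    exp = max(-127, min(127, exp))  # stay inside codes 0..254 (255 is NaN)
    return exp + BIAS               # step 3: add the bias


tensor = [0.01, -0.5, 3.14, 1.25, 1000.0, -9000.0]
print([float_to_ue8m0(v) for v in tensor])  # [120, 126, 129, 127, 137, 140]
```

Under this rule 0.01 maps to 2^-7 (code 120), since 0.0078 is closer than 0.0156, and 3.14 maps to 2^2 = 4 (code 129), since the midpoint between 2 and 4 is 3. Rounding in the log domain (round(log2|x|)) gives the same codes for this tensor but differs for values between about 1.414 × 2^k and 1.5 × 2^k.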

Let’s work through some examples with the tensor: [0.01, -0.5, 3.14, 1.25, 1000.0, -9000.0]

Handling Negative Numbers

Since UE8M0 is an unsigned format, negative values like -0.5 and -9000.0 cannot be directly represented. In practical applications, the handling of negative numbers depends on the specific implementation. A common approach is to take the absolute value of the number before conversion, as the sign is often handled separately or is irrelevant for a scaling factor. For this example, we will use the absolute value.

Our tensor now becomes: [0.01, 0.5, 3.14, 1.25, 1000.0, 9000.0]

Converting Each Value

Value: 0.01

- Find the True Exponent (E): We need E such that 2^E is close to 0.01. The neighbouring powers are 2^-7 = 1/128 ≈ 0.0078 and 2^-6 = 1/64 ≈ 0.0156. 0.01 is closer to 0.0078 (about 0.0022 away, versus 0.0056 from 0.0156), so we round to 2^-7. The true exponent is E = -7.

- Calculate Biased Exponent: Stored Value = -7 + 127 = 120.

- UE8M0 Representation: The 8-bit unsigned integer is 120.

Value: 0.5

- Find the True Exponent (E): 0.5 is exactly 1/2, which is 2^-1. The true exponent is E = -1.

- Calculate Biased Exponent: Stored Value = -1 + 127 = 126.

- UE8M0 Representation: The 8-bit unsigned integer is 126.

Value: 3.14

- Find the True Exponent (E): We need the nearest power of 2. The neighbouring powers are 2^1 = 2 and 2^2 = 4. 3.14 is closer to 4 (0.86 away, versus 1.14 from 2), so we round to 2^2. The true exponent is E = 2.

- Calculate Biased Exponent: Stored Value = 2 + 127 = 129.

- UE8M0 Representation: The 8-bit unsigned integer is 129.

Value: 1.25

- Find the True Exponent (E): The neighbouring powers are 2^0 = 1 and 2^1 = 2. 1.25 is closer to 1, so we round to 2^0. The true exponent is E = 0.

- Calculate Biased Exponent: Stored Value = 0 + 127 = 127.

- UE8M0 Representation: The 8-bit unsigned integer is 127.

Value: 1000.0

- Find the True Exponent (E): The neighbouring powers are 2^9 = 512 and 2^10 = 1024. 1000.0 is closer to 1024, so we round to 2^10. The true exponent is E = 10.

- Calculate Biased Exponent: Stored Value = 10 + 127 = 137.

- UE8M0 Representation: The 8-bit unsigned integer is 137.

Value: 9000.0 (from -9000.0)

- Find the True Exponent (E): The neighbouring powers are 2^13 = 8192 and 2^14 = 16384. 9000.0 is closer to 8192, so we round to 2^13. The true exponent is E = 13.

- Calculate Biased Exponent: Stored Value = 13 + 127 = 140.

- UE8M0 Representation: The 8-bit unsigned integer is 140.

Conversion Result

Taking our sample tensor and converting each value:

| Original | Absolute | Nearest Power of 2 | Exponent | UE8M0 Value |
|---|---|---|---|---|
| 0.01 | 0.01 | 2^-7 (0.0078) | -7 | 120 (-7+127) |
| -0.5 | 0.5 | 2^-1 (0.5) | -1 | 126 (-1+127) |
| 3.14 | 3.14 | 2^2 (4.0) | 2 | 129 (2+127) |
| 1.25 | 1.25 | 2^0 (1.0) | 0 | 127 (0+127) |
| 1000.0 | 1000.0 | 2^10 (1024) | 10 | 137 (10+127) |
| -9000.0 | 9000.0 | 2^13 (8192) | 13 | 140 (13+127) |

The key insight: we’re storing the exponent of the nearest power of 2 plus a bias of 127 (the same bias IEEE 754 single precision uses for its 8-bit exponent field).

Our original tensor [0.01, -0.5, 3.14, 1.25, 1000.0, -9000.0] becomes: [120, 126, 129, 127, 137, 140]

What We Lose and Gain

This conversion reveals UE8M0’s fundamental trade-off:

Lost:

- Precision: 1.25 becomes 1.0, 3.14 becomes 4.0, and 0.01 becomes 0.0078125

- Sign information: All values become positive

- Exact representations: Only powers of 2 are exact
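Decoding the stored codes back makes the precision loss measurable. A small Python sketch, using the same nearest-power-of-two rule as a hypothetical `encode` helper (it assumes finite, nonzero inputs) and a matching `decode`:

```python
import math

BIAS = 127  # UE8M0 exponent bias


def encode(x: float) -> int:
    """Nearest power of two (linear distance, ties up); assumes x is finite and nonzero."""
    m, e = math.frexp(abs(x))
    exp = e if m >= 0.75 else e - 1
    return max(-127, min(127, exp)) + BIAS


def decode(code: int) -> float:
    return math.ldexp(1.0, code - BIAS)


tensor = [0.01, -0.5, 3.14, 1.25, 1000.0, -9000.0]
for x in tensor:
    y = decode(encode(x))
    rel_err = abs(abs(x) - y) / abs(x)  # compare magnitudes: the sign is lost anyway
    print(f"{x:>8} -> {y:<10g} relative error {rel_err:.1%}")
```

For this tensor the error ranges from 0% for 0.5 (already a power of two) to about 27% for 3.14. With round-to-nearest in the linear domain the relative error never exceeds 1/3 (reached near 1.5 × 2^k), which is why UE8M0 works as a coarse scale rather than as a value format.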

Gained:

- Massive range: Can represent from 2^-127 to 2^127

- Memory efficiency: 8 bits vs 16+ bits for higher precision formats

- Computational speed: Applying a power-of-2 scale only adjusts an exponent, which is cheaper than a full multiply

Real-World Applications

Training Large Language Models

DeepSeek reports training DeepSeek-V3.1, a model with 671 billion total parameters, using the UE8M0 FP8 scale format. FP8 training with fine-grained scaling of this kind aims to maintain competitive performance while:

- Cutting memory for FP8-stored tensors by roughly 50% compared to BF16

- Raising throughput, up to roughly double BF16 matrix-multiply speed on hardware with native FP8 support

- Maintaining model quality through careful scaling factor management

Practical Implementation

In practice, UE8M0 works as part of a mixed-precision training pipeline:

Input (BF16) → Scale into FP8 range (scale stored as UE8M0) → Compute (FP8) → Scale back → Output (BF16)

The scaling factors (stored in UE8M0) keep each block of values inside FP8’s narrow representable range, which helps keep the reduced precision from destabilizing training, while the bulk of computation happens in efficient 8-bit arithmetic.
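The pipeline can be simulated end to end in plain Python. This sketch is a toy built on assumptions, not DeepSeek’s kernel: it emulates FP8 E4M3 rounding in software (`round_to_e4m3`, saturating at ±448), picks one round-up UE8M0 scale per block, computes a dot product on the FP8 values with a full-precision accumulator, and rescales at the end by adding the two scale exponents. All helper names and example data are hypothetical:

```python
import math

E4M3_MAX = 448.0  # largest finite FP8 E4M3 magnitude
BIAS = 127        # UE8M0 exponent bias


def round_to_e4m3(x: float) -> float:
    """Software emulation: round x to the nearest E4M3 value, saturating at +/-448."""
    if x == 0.0:
        return 0.0
    _, e = math.frexp(abs(x))               # abs(x) = m * 2**e with 0.5 <= m < 1
    exp = max(e - 1, -6)                    # -6 is E4M3's smallest normal exponent
    quantum = math.ldexp(1.0, exp - 3)      # gap between neighbours: 3 mantissa bits
    q = round(abs(x) / quantum) * quantum   # round() breaks ties to even
    return math.copysign(min(q, E4M3_MAX), x)


def quantize_block(block: list[float]) -> tuple[int, list[float]]:
    """Return (UE8M0 scale code, FP8-rounded scaled values) for one block."""
    amax = max(abs(v) for v in block)
    exp = 0 if amax == 0.0 else math.ceil(math.log2(amax / E4M3_MAX))
    exp = max(-127, min(127, exp))          # keep the scale a finite UE8M0 code
    return exp + BIAS, [round_to_e4m3(math.ldexp(v, -exp)) for v in block]


def scaled_dot(a: list[float], b: list[float]) -> float:
    """Dot product computed on FP8 values, then rescaled by the two UE8M0 scales."""
    code_a, qa = quantize_block(a)
    code_b, qb = quantize_block(b)
    acc = sum(x * y for x, y in zip(qa, qb))  # accumulate in full precision
    # Multiplying two powers of two is just adding their exponents.
    return math.ldexp(acc, (code_a - BIAS) + (code_b - BIAS))


a = [0.01, -0.5, 3.14, 1.25, 1000.0, -9000.0]
b = [1.0, 2.0, -0.75, 0.5, 0.001, 0.0002]
exact = sum(x * y for x, y in zip(a, b))
print(f"exact={exact:.6f}  fp8={scaled_dot(a, b):.6f}")
```

With these inputs the FP8 result lands about 3.4% away from the exact dot product of -3.52. Note that 0.01 rounds to zero after scaling, because the block’s scale is dictated by -9000.0; that is one reason fine-grained (small-block) scaling matters. The rescaling step itself is exact, since multiplying by a power of two only changes the exponent.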

Limitations and Trade-offs

UE8M0 isn’t suitable for all use cases. Key limitations include:

Precision Loss:

- Only represents powers of 2 exactly

- Significant rounding errors for most real numbers

- Not suitable for applications requiring fine-grained precision

Implementation Complexity:

- Requires careful tuning of scaling factors

- Mixed-precision pipelines are more complex to implement

- Hardware support is limited to specific accelerators

Limited Applicability:

- Best suited for scaling factors, not general computation

- Requires domain expertise to implement effectively

- May not provide benefits on non-optimized hardware

The Bigger Picture

UE8M0 represents more than just a clever number format: it is part of a broader shift toward specialized computing for AI. As models grow larger and computational demands increase, we’re seeing:

- Hardware diversification: Moving beyond a GPU monoculture toward specialized AI accelerators

- Format innovation: Custom number formats optimized for specific workloads rather than general-purpose computing

- Geopolitical implications: Domestic hardware ecosystems reducing dependency on single vendors

DeepSeek-V3.1 suggests that innovative number formats can unlock new possibilities in AI training, potentially broadening access to large-scale AI development through more efficient computation.

Whether UE8M0 becomes widely adopted remains to be seen, but it shows there is still room for fundamental innovation in how we represent and compute with numbers, even in a domain as mature as floating-point arithmetic.

Appendix

Logarithmic Number Systems (LNS)

Here are several examples to illustrate how the Logarithmic Number System (LNS) works, especially compared to the usual floating-point approach:

1. Basic Representation:

- In traditional floating-point (FP), a number is represented as $x = m \times 2^{e}$, where $m$ is the mantissa and $e$ the exponent.

- In LNS, a number is stored as its logarithm:

- Store: $L = \log_b x$ (usually $b = 2$), so $x = b^{L}$

- Only the exponent/log value is stored.

2. Simple Examples:

| Decimal Value | LNS Value (log₂) | Binary (FP32) |
|---|---|---|
| 1 | 0 | 0x3F800000 |
| 2 | 1 | 0x40000000 |
| 4 | 2 | 0x40800000 |
| 0.5 | -1 | 0x3F000000 |
| 8 | 3 | 0x41000000 |
| 0.25 | -2 | 0x3E800000 |

3. LNS Math Operations:

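- Multiplication:

- FP: $z = x \cdot y$

- LNS: $L_z = L_x + L_y$, where $L_x = \log_2 x$ and $L_y = \log_2 y$

- You just add log values: $\log_2(x \cdot y) = \log_2 x + \log_2 y$

- Division:

- FP: $z = x / y$

- LNS: $L_z = L_x - L_y$, so subtraction.

- Exponentiation:

- FP: $z = x^{k}$

- LNS: $L_z = k \cdot L_x$

- Addition is not trivial in LNS and requires special handling (using log-add-exp or similar tricks): $\log_2(x + y) = L_x + \log_2\left(1 + 2^{L_y - L_x}\right)$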

4. Example Calculation (Multiplication):

Suppose you want to multiply 2 and 8:

- In FP: $2 \times 8 = 16$

- In LNS:

- $\log_2 2 = 1$, $\log_2 8 = 3$

- Add logs: $1 + 3 = 4$

- Back to real: $2^{4} = 16$

5. Non-Power-of-Two:

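- Number: 3, with $\log_2 3 \approx 1.585$

- Number: 5, with $\log_2 5 \approx 2.322$

Multiply 3 and 5:

- Add logs: $1.585 + 2.322 = 3.907$

- Back to real: $2^{3.907} \approx 15.00$ (True: $3 \times 5 = 15$)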
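The worked examples can be reproduced with a toy base-2 LNS in Python. The helpers `to_lns`, `from_lns`, `lns_mul`, `lns_div`, `lns_pow`, and `lns_add` are hypothetical names; values must be positive, and Python floats stand in for the fixed-point logs a hardware LNS would store:

```python
import math

# Toy base-2 LNS for positive reals: a value x is represented only by L = log2(x).


def to_lns(x: float) -> float:
    return math.log2(x)


def from_lns(L: float) -> float:
    return 2.0 ** L


def lns_mul(la: float, lb: float) -> float:
    return la + lb  # log2(a * b) = log2(a) + log2(b)


def lns_div(la: float, lb: float) -> float:
    return la - lb  # log2(a / b) = log2(a) - log2(b)


def lns_pow(la: float, k: float) -> float:
    return k * la  # log2(a ** k) = k * log2(a)


def lns_add(la: float, lb: float) -> float:
    # log2(a + b) = hi + log2(1 + 2**(lo - hi)): a nonlinear correction term,
    # which is what makes addition the expensive operation in an LNS.
    hi, lo = max(la, lb), min(la, lb)
    return hi + math.log2(1.0 + 2.0 ** (lo - hi))


print(from_lns(lns_mul(to_lns(2), to_lns(8))))  # 16.0
print(from_lns(lns_mul(to_lns(3), to_lns(5))))  # close to 15 (floating-point rounding)
```

Multiplication, division, and powers reduce to one addition or scaling of the logs, while `lns_add` needs the extra log2(1 + 2^d) term; LNS hardware often approximates that function with lookup tables.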

Summary Table:

| Operation | FP Approach | LNS Approach |
|---|---|---|
| Multiply | Multiply two numbers | Add their logs, $L_x + L_y$ |
| Divide | Divide two numbers | Subtract logs, $L_x - L_y$ |
| Power | Raise to a power | Multiply log by scalar, $k \cdot L_x$ |

Practical Use:

- In hardware or AI chips, using LNS means multipliers can be replaced with simple adders, which are much cheaper in area and energy; the cost shifts to addition, which needs the log-add-exp handling noted above.
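UE8M0 can be read as the simplest possible LNS: a base-2 log restricted to integers. Multiplying two UE8M0 values therefore needs only an integer addition on the stored codes. A minimal sketch; `ue8m0_mul`, the saturation on overflow, and the NaN propagation are assumptions for illustration:

```python
BIAS = 127       # UE8M0 exponent bias
NAN_CODE = 0xFF  # UE8M0's NaN encoding


def ue8m0_mul(code_a: int, code_b: int) -> int:
    """Multiply two UE8M0 values by adding their exponents (hypothetical helper).

    (code_a - 127) + (code_b - 127) is the product's exponent; re-adding the
    bias gives code_a + code_b - 127. Out-of-range results saturate to 0 or 254
    (an assumption), and NaN inputs propagate.
    """
    if code_a == NAN_CODE or code_b == NAN_CODE:
        return NAN_CODE
    return max(0, min(254, code_a + code_b - BIAS))


print(ue8m0_mul(128, 129))  # 130: 2 * 4 = 8 = 2**3
```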

NVIDIA’s Implementation

NVIDIA has also adopted UE8M0 in its PTX (Parallel Thread Execution) instruction set architecture, with some key implementation details [1]:

- Packed storage: UE8M0 values are stored as ue8m0x2 format (two 8-bit values in a 16-bit register)

- Special values: Uses 0xff for NaN; no support for infinity

- Instruction-level support: Available as a source/destination format for specific operations

- Range-first philosophy: Prioritizes dynamic range over precision for specialized workloads

This suggests that UE8M0 is becoming a recognized format for efficient AI computation across different hardware ecosystems.
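As an illustration of the packed layout, here is a sketch that splits a 16-bit ue8m0x2 word into two decoded values. The byte order (element 0 in the low byte) is an assumption, as is the helper name `unpack_ue8m0x2`; consult the PTX ISA documentation for the actual lane order before relying on it:

```python
import math

BIAS = 127  # UE8M0 exponent bias


def unpack_ue8m0x2(packed: int) -> tuple[float, float]:
    """Split a 16-bit ue8m0x2 word into two decoded values.

    Assumption: element 0 sits in the low byte and element 1 in the high byte.
    """
    if not 0 <= packed <= 0xFFFF:
        raise ValueError("expected a 16-bit value")

    def decode(code: int) -> float:
        # 0xFF is NaN; every other code is 2**(code - 127).
        return math.nan if code == 0xFF else math.ldexp(1.0, code - BIAS)

    return decode(packed & 0xFF), decode(packed >> 8)


lo, hi = unpack_ue8m0x2(0xFF80)
print(lo, hi)  # 2.0 nan
```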